Outages

File sharing and collaboration service Box fell victim to an extended outage that affected all of its services on Friday.

How not to share a root cause analysis: Lessons from Australia's Optus and Canada's Rogers...

Two back-to-back outages came on the day of OpenAI's new models and services launch and appear to have grown more severe today...

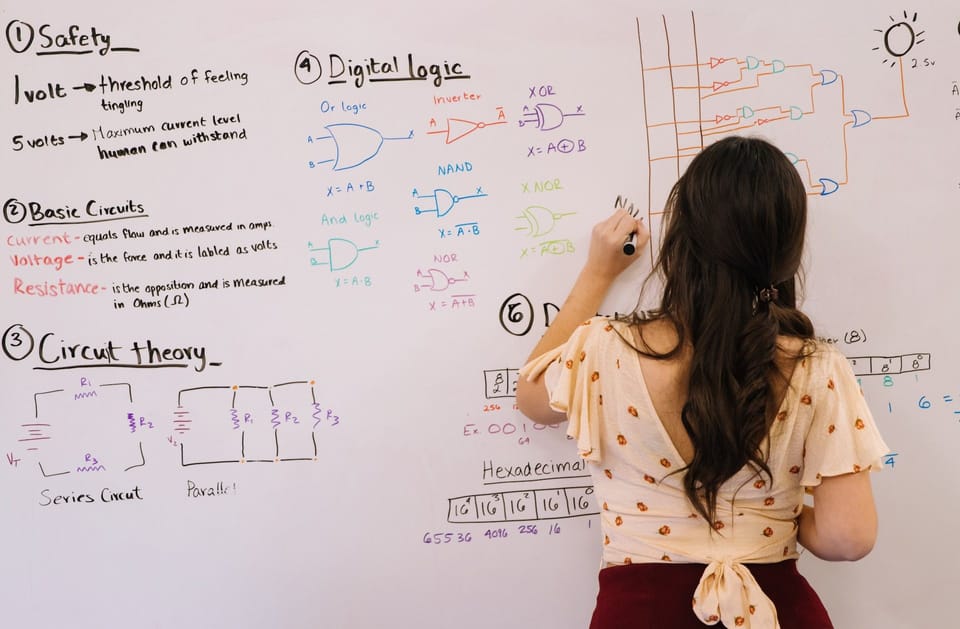

"Dependencies shouldn’t have been so tight, should have failed more gracefully, and we should have caught them"

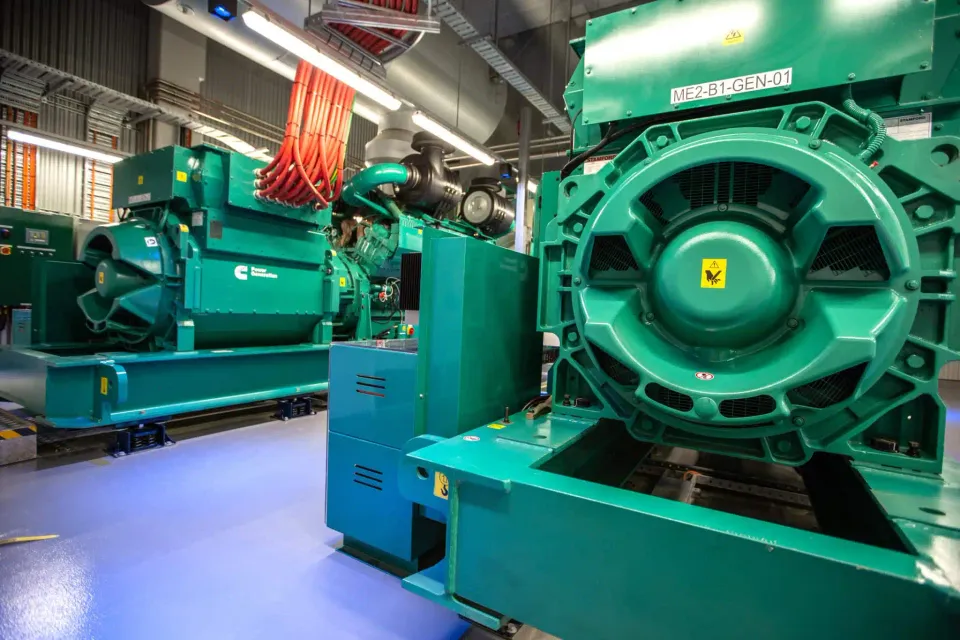

Upstream utility disturbance triggers brief bout of sweating with "a small amount of storage nodes" needing to be recovered manually in the wake of the Azure incident.

"our engineers have been focused on mitigating the impact of the delayed replication through changes made to replication subsystems, resource adjustments and other modifications"

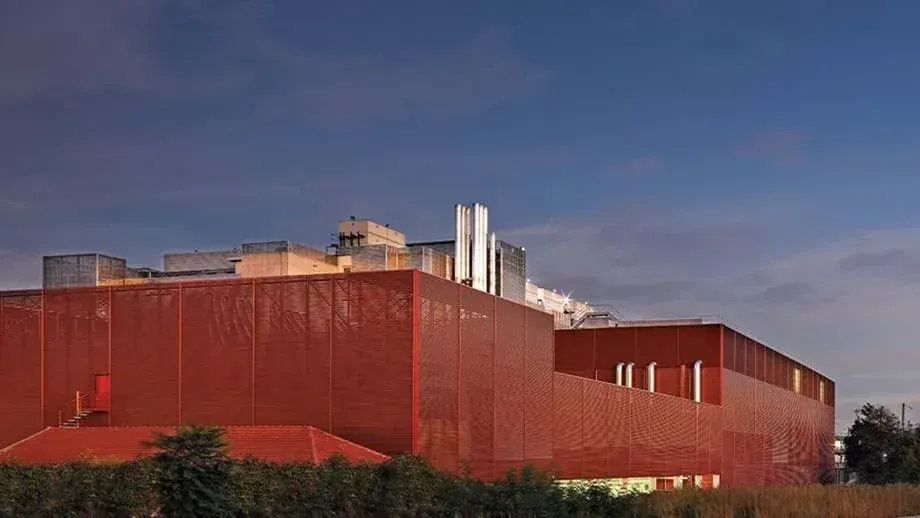

Fire-fighting was not helped by Global Switch’s fire suppression system “running out of water”. The incident also introduced water and soot contamination. Google Cloud’s affected racks had to be taken apart, thoroughly cleaned and reassembled before they could be restarted.

AWS admitted that “customers may also have experienced issues when attempting to initiate a Call or Chat to AWS Support” during the incident. What happened to recent architectural changes designed to avoid this?