Intel and the International Olympic Committee have trained up a retrieval-augmented generation (RAG) chatbot called Athlete365 that speaks six languages and is designed to help athletes cut through the organisational complexity that inevitably accompanies large events.

The bot handles athlete inquiries and delivers on-demand information to athletes during their stay at the Olympic Village in Paris.

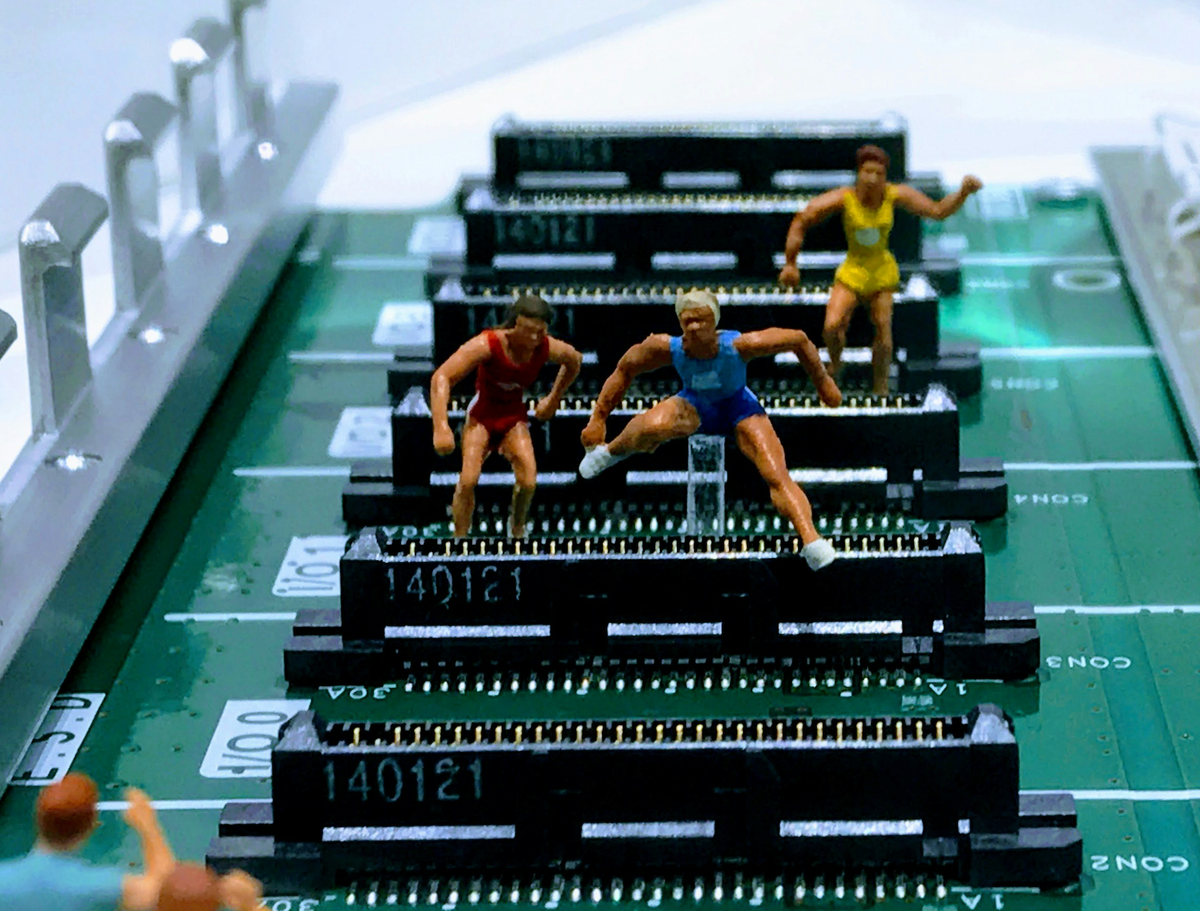

It offers a friendly RAG-powered front end, retrieving data behind the scenes so that athletes don't need to perform the mental gymnastics required to meaningfully interpret information from a large data set.

At an event to introduce the bot, Kaveh Mehrabi, a former professional badminton player and Director of the IOC’s Athletes’ Department, said Athlete365 was built to solve the major challenge of giving athletes easy access to information during the competition.

“When I was competing, I would argue that it was a bit easier because you just didn't have access to so much information,” he said. “With digitisation comes more information. And as much as the information is good, it can also become overwhelming and sometimes even difficult to understand.”

Athlete365 approaches the starting block with the ability to speak English, French, Spanish, Mandarin, Russian, and Arabic, which covers almost all of the athletes' first or second languages.

It was built on the standards and foundations set by the Open Platform for Enterprise AI (OPEA), incorporating OPEA-based microservice components into a RAG solution designed to deploy on Xeon and Gaudi AI systems. Athlete365 is compatible with orchestration frameworks like Kubernetes and Red Hat OpenShift and provides standardised APIs with security and system telemetry.

Intel said its chatbot “breaks down proprietary walls with an open software stack.”

“Nearly all LLM development is based on the high abstraction framework PyTorch, which is supported by Intel Gaudi and Xeon technologies, making it easy to develop on Intel AI systems or platforms,” it wrote in a statement.

“Intel has worked with OPEA to develop an open software stack for RAG and LLM deployment optimised for the GenAI turnkey solution and built with PyTorch, Hugging Face serving libraries (TGI and TEI).”

It added: “OPEA offers open source, standardised, modular and heterogeneous RAG pipelines for enterprises, focusing on open model development and support for various compilers and toolchains. This foundation accelerates containerised AI integration and delivery for unique vertical use cases. OPEA unlocks new AI possibilities by creating a detailed, composable framework that stands at the forefront of technology stacks.”

At the launch event, Bill Pearson, Intel VP of Data Center and Artificial Intelligence, said the “takeaway for the enterprise” of its Olympics RAGathon was simply “data”.

“Enterprises have tons of data, which is often proprietary or historical data but still needs to be put to use,” he said. “LLMs are a great innovation, but don’t have access to that enterprise data.

“The technology and paradigm of RAG allows us to marry those two things together so [users can] ask questions, get the power of the LLM with the specificity and accuracy of enterprise data. At the same time, we can keep that enterprise data secure so it's not being shared with the world or with the LLM.”
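For readers unfamiliar with the pattern Pearson describes, the retrieve-then-prompt idea can be illustrated with a minimal sketch. This is not Intel's or OPEA's implementation. The documents, function names and the simple word-count similarity below are all assumptions made for illustration; a production system would typically use an embedding model and a vector store, and the final prompt would be sent to an LLM rather than returned.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for private documents (not real Athlete365 data).
DOCUMENTS = [
    "The dining hall in the village is open from 06:00 to 23:00.",
    "Shuttle buses to the aquatics venue leave every 20 minutes.",
    "Accreditation cards must be worn at all times inside venues.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Combine retrieved context and the question into one LLM prompt."""
    context = "\n".join(retrieve(question, documents, k))
    return (
        "Answer using only this context:\n"
        f"{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("When is the dining hall open?", DOCUMENTS))
```

In this sketch only the passages selected by `retrieve` are placed in the prompt, so the rest of the document store never reaches the model.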