CrowdStrike CEO apologises after content update "defect" caused global blue screen of death outage

"I don’t think it’s too early to call it: this will be the largest IT outage in history," celebrity cybersecurity specialist announces

See also our update of July 20: CrowdStrike promises a root cause analysis (RCA), C++ null pointer blamed.

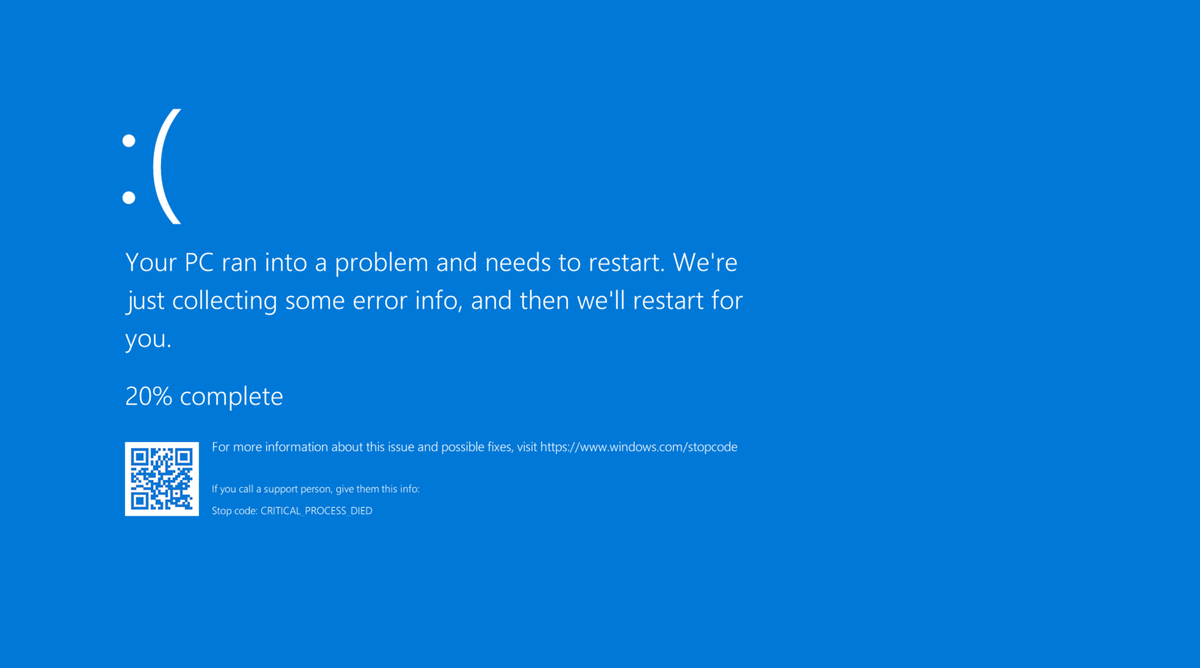

A Microsoft Windows blue screen of death (BSOD) outage of epic proportions has grounded planes, closed down supermarket checkouts and hobbled vast numbers of devices across the world, with CrowdStrike confirming that a "defect" in an update is responsible for the problems.

In an incident that's being described as one of the biggest IT outages of all time, devices around the world became stuck in a boot loop and refused to load.

The outage meant that Sky News was unable to broadcast this morning, whilst in the US major airlines reportedly kept their planes on the ground. Customers have been unable to pay at supermarket checkouts due to problems with point-of-sale (PoS) devices.

George Kurtz, President and CEO of CrowdStrike, has now apologised in an interview with The Today Show.

"We’re deeply sorry for the impact that we’ve caused to customers, to travelers, to anyone affected by this," he said.

The CEO also issued a statement on X which said: "CrowdStrike is actively working with customers impacted by a defect found in a single content update for Windows hosts. Mac and Linux hosts are not impacted. This is not a security incident or cyberattack. The issue has been identified, isolated and a fix has been deployed."

CrowdStrike added: "Our team is fully mobilized to ensure the security and stability of CrowdStrike customers."

EXCLUSIVE: CrowdStrike founder and CEO @George_Kurtz speaks on TODAY about the major computer outages worldwide that started earlier today: “We’re deeply sorry for the impact that we’ve caused to customers, to travelers, to anyone affected by this.” pic.twitter.com/fWz6KhgrcZ

— TODAY (@TODAYshow) July 19, 2024

As the world rushed to fix the problems caused by the defect, AWS warned of "reboots of Windows Instances, Windows Workspaces and Appstream Applications related to a recent update to the Crowdstrike agent (csagent.sys), which is resulting in a stop error (BSOD) within the Windows operating system."

It wrote: "Some customers are seeing success with a reboot of their EC2 instance. CrowdStrike have deployed an update that will replace the '*.sys' file during a reboot with no need to Safe Mode or the detachment of the root volume."

The defective update was to CrowdStrike's Falcon sensor software.

As the story of the outage broke across the world, Troy Hunt, creator of @haveibeenpwned, wrote on X: "I don’t think it’s too early to call it: this will be the largest IT outage in history."

How did a CrowdStrike update cause the BSOD outage?

Cybersecurity researcher Kevin Beaumont has obtained copies of the .sys files delivered to CrowdStrike customers.

"The .sys files causing the issue are channel update files, they cause the top level CS driver to crash as they're invalidly formatted," he claimed. "It's unclear how/why Crowdstrike delivered the files and I'd pause all Crowdstrike updates temporarily until they can explain.

"This is going to turn out to be the biggest 'cyber' incident ever in terms of impact, just a spoiler, as recovery is so difficult."

He also shared the following warning: "I'm seeing people posting scripts for automated recovery. Scripts don't work if the machine won't boot (it causes instant BSOD) – you still need to manually boot the system in safe mode, get through BitLocker recovery (needs per system key), then execute anything.

"Crowdstrike are huge, at a global scale that's going to take.. some time."

On Reddit, users shared an early advisory from CrowdStrike, which said: "Hello everyone - We have widespread reports of BSODs on windows hosts, occurring on multiple sensor versions. Investigating cause. TA [tech alert] will be published shortly."

"CrowdStrike Engineering has identified a content deployment related to this issue and reverted those changes," the advisory added. It included a link to CrowdStrike's support portal.

Beneath that post, another forum user wrote: "I mean, you can't say it's not protecting you from malware if your entire system and servers are down."

Here is the fix put out by #Crowdstrike to resolve the global IT outage, if you’re a customer. pic.twitter.com/QCKSCRQ1uV

— Hugh Riminton (@hughriminton) July 19, 2024

Screenshots of what is claimed to be a CrowdStrike announcement about a fix are now circulating on social media (such as the post shared above).

"Crowdstrike is aware of reports of crashes on Windows hosts related to the Falcon Sensor," it said.

"Symptoms include hosts experiencing a black/ blue screen error."

It added: "Crowdstrike engineering has identified a content deployment related to this issue and reverted those changes."

The screenshot shared the following workaround (which was also included in the Reddit thread mentioned earlier):

1. Boot Windows into Safe Mode or the Windows Recovery Environment (WinRE).

2. Go to C:\Windows\System32\drivers\CrowdStrike

3. Locate and delete the file matching "C-00000291*.sys"

4. Boot normally.
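
For readers who want to see steps 2 and 3 expressed as code, here is a minimal Python sketch. It is illustrative only, not an official CrowdStrike tool. The directory and file pattern come from the workaround above, while the function name `remove_faulty_channel_files` and its `dry_run` flag are hypothetical names introduced for this example. As Beaumont warns, nothing like this can run on a machine that blue-screens at boot: it assumes the host has already been started in Safe Mode (or the drive is otherwise accessible and unlocked) and that a Python interpreter is available there.

```python
from pathlib import Path

# Directory and pattern as given in the workaround steps above.
DEFAULT_DRIVER_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")
CHANNEL_FILE_PATTERN = "C-00000291*.sys"


def remove_faulty_channel_files(driver_dir=DEFAULT_DRIVER_DIR, dry_run=True):
    """Return the files matching the pattern; delete them unless dry_run is True.

    Hypothetical helper for illustration. It returns an empty list if the
    directory does not exist.
    """
    driver_dir = Path(driver_dir)
    if not driver_dir.is_dir():
        return []
    matches = sorted(driver_dir.glob(CHANNEL_FILE_PATTERN))
    if not dry_run:
        for path in matches:
            path.unlink()  # step 3: delete the matching channel file
    return matches


if __name__ == "__main__":
    # Default to a dry run so nothing is deleted by accident.
    for path in remove_faulty_channel_files(dry_run=True):
        print(f"would delete: {path}")
```

The dry-run default is a deliberate choice: it lists what would be removed before anything is touched, and only a call with `dry_run=False` actually deletes files.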

An X account describing itself as belonging to Brody N, Director of Threat Hunting Operations at CrowdStrike, described the outage as "a mess."

"There is a faulty channel file, so not quite an update," he wrote.

"There is a fix of sorts so some devices in between BSODs should pick up the new channel file and remain stable," the account added.

Throughout the day, forum and social media users shared their own nightmare stories about the outage. We expect remediation work to continue for some time, so for many people, the story is just beginning.

"We lost over 960 instances in the datacenter," one user wrote. "Workstations across the globe lost. The recovery for staff workstations is going to be insane."

"Just had 160 servers all BSOD," another said. "This is NOT going to be a fun evening."

90% of my local Woolworths registers had the #BSOD. Queues a mile long, absolute chaos. National outage? Local? pic.twitter.com/IuztPMfaQw

— BYoung 🇦🇺 (@MrBMYoung) July 19, 2024

If you make windows update today this what will happen #BSOD pic.twitter.com/mpso1WDA4z

— Alzain (@Alzain55M) July 19, 2024

⚠️ We are currently experiencing widespread IT issues across our entire network. Our IT teams are actively investigating to determine the root cause of the problem.

We are unable to access driver diagrams at certain locations, leading to potential short-notice cancellations,…

— Thameslink (@TLRailUK) July 19, 2024

The Microsoft / CrowdStrike outage has taken down most airports in India. I got my first hand-written boarding pass today 😅 pic.twitter.com/xsdnq1Pgjr

— Akshay Kothari (@akothari) July 19, 2024

On X, a security engineer joked that the company responsible for the outage was "declaring an early weekend by taking out half the world’s systems."

"Even ransomware isn’t this effective," they added.

"For years to come, the IT admin team will bring this up whenever you ask them to install another agent on the endpoints," Florian Roth, Head of Research at Nextron Systems, told his 184,100 followers.

Industry reactions to the outage

Speaking to The Stack, Omer Grossman, CIO at identity security company CyberArk, claimed: "The current event appears – even in July – that it will be one of the most significant of cyber issues of 2024. The damage to business processes at the global level is dramatic. The glitch is due to a software update of CrowdStrike's EDR product. This is a product that runs with high privileges that protects endpoints. A malfunction in this can, as we are seeing in the current incident, cause the operating system to crash."

Discussing the possible causes of the issue, Grossman speculated: "The range of possibilities ranges from human error - for instance a developer who downloaded an update without sufficient quality control - to the complex and intriguing scenario of a deep cyberattack, prepared ahead of time and involving an attacker activating a 'doomsday command' or 'kill switch'."

Mark Lloyd, Business Unit Manager at Axians UK, added: "This outage is a stark reminder of how dependent the world is on cloud services. From productivity tools to critical infrastructure, a large chunk of technology runs on cloud platforms. This outage showcases the immense power and reach these services hold.

"Even the biggest tech giants are not immune to disruptions, and the need for robust redundancy and disaster recovery plans across the board are more critical than ever in this day and age."