Azure

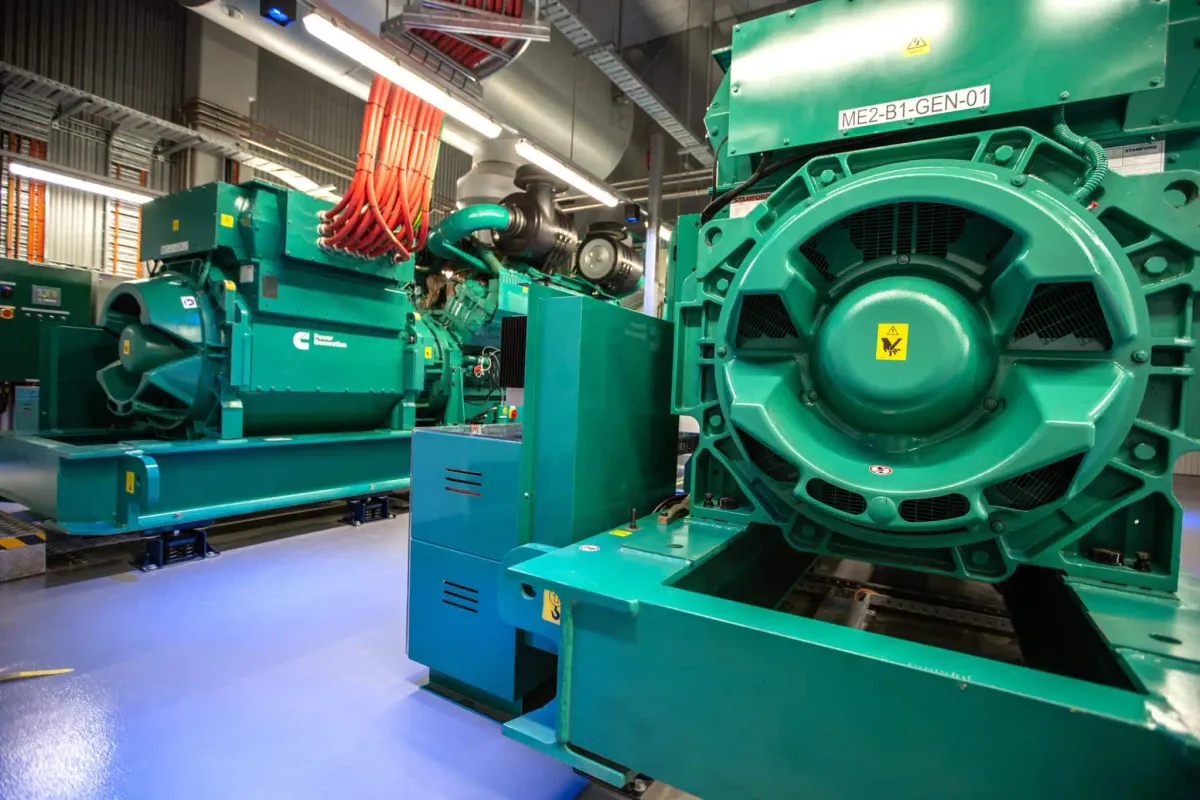

In your weekly reminder that a) cloud services are not immune to borkage and b) uninterruptible power supply is a great misnomer, Azure has blamed an incident affecting one of its three West Europe Availability Zones (Netherlands) on an “upstream utility disturbance” and a subsequent failure to fully fail over to generator power.

The incident affected some customers using Virtual Machines, Storage, App Service, SQL DB, Cosmos DB and other services in the AZ. Recovery was largely complete in under two hours, although “a small amount of storage nodes needs to be recovered manually, leading to delays in recovery for some services and customers,” Azure said today.

“We are working to recover these nodes and will continue to communicate to these impacted customers directly via the Service Health blade in the Azure Portal,” an Azure status update noted.

The outage comes days after AWS suffered an incident of its own in US-EAST-1 that left it processing a “backlog” of S3 objects.

(An earlier June 2023 incident, also in US-EAST-1, has been attributed to a “latent software defect” triggered when AWS's Lambda Frontend fleet, while adding compute capacity to handle an increase in service traffic, “crossed a capacity threshold that had previously never been reached within a single cell”, a post-mortem shows. A cell is made up of multiple subsystems that serve function invocations for customer code.)

Last week, meanwhile, DBS and Citibank banking services in Singapore were briefly knocked offline by an outage at an Equinix data center.

Whilst cloud hyperscalers generally manage enviable uptime and take the pain of managing such outages away from enterprise IT leaders, the sheer scale of demand being placed on their infrastructure, as well as power-related incidents, do periodically cause issues.